$reader = New-Object -ArgumentList $pdf.FullNameįor($page = 1 $page -le $reader. You can run with this to rename files if matches are found, move them to categorized folders, and the likes.ĮDIT: Github page for itextsharp indicates it is end-of-life and links to Itext7 (dual licensed as AGPL/Commercial software, seems free for non-commercial use.) Add-Type -Path "C:\path_to_dll\itextsharp.dll"

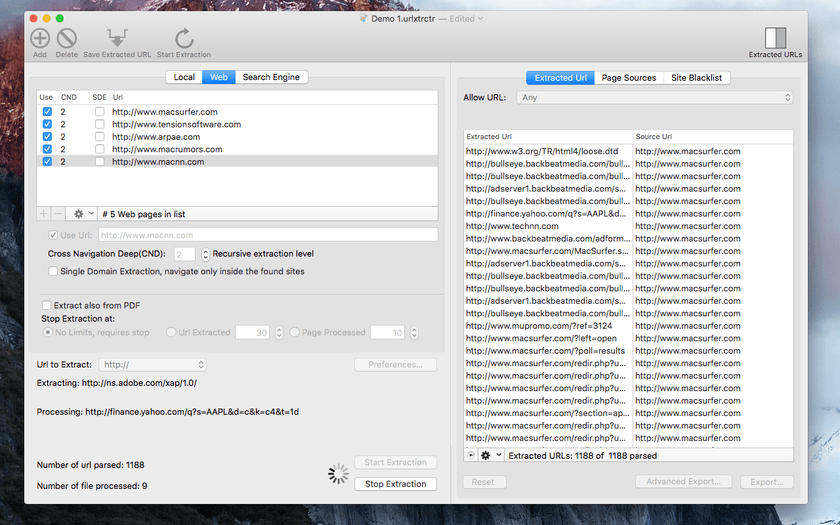

Pdf url extractor grep pdf#

The below evaluates the text on each page of each pdf for keywords, then exports any matches to a csv. However I get a bunch of these errors: An object at the specified path C:\filename.pdf does not exist, or has been filtered by the -Include or -Exclude parameter. As of now I've tried the following: get-childitem -Recurse | where The crucial point here is that the keyword will be in the content (body of a pdf, cell of an excel etc.) and not in the filename. I would like to have a powershell script that basically executes a series of scripts looking for all the files of a certain format containing specific keywords and outputting each list to a separate csv. The idea is that, for a certain format and a certain type, I know what keywords to look for in the file's contents. I'm interested in creating a catalogue by type.

The files are in no particular order and might as well be looked at as a single list. The process of pdf to url conversion can take a some seconds or minutes depending on the size of the file you are converting. Press the green button 'convert' and wait for your browser to download the url file that you have converted before.

Each file can be defined as a certain type (ex: product sheet, business plan, offer, presentation, etc). To convert pdf to url press the 'browse' button, then search and select the pdf file you need to convert. I have a huge mess of files (around ten thousand) of various formats.
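The sketch below shows one way to extend the per-page keyword scan to the catalogue-by-type requirement in the question. It assumes the iTextSharp 5 API (`iTextSharp.text.pdf.PdfReader`, its `NumberOfPages` property, and `iTextSharp.text.pdf.parser.PdfTextExtractor.GetTextFromPage`). The type names and keywords in `$Catalogue` are hypothetical placeholders. So are the functions `Find-TypeMatches`, `Get-PdfPageText` and `Export-PdfCatalogue` and their parameters. The sketch covers only PDFs, so Excel files would need a separate text extractor that feeds the same `Find-TypeMatches` step.

```powershell
# Hypothetical catalogue: document type -> keywords expected in its contents.
$Catalogue = [ordered]@{
    'ProductSheet' = @('specifications', 'dimensions')
    'BusinessPlan' = @('market analysis', 'financial projections')
}

function Find-TypeMatches {
    # Returns one object per (type, keyword) pair found in a block of text.
    # PowerShell's -match is case-insensitive by default.
    param(
        [string]$Text,
        [System.Collections.IDictionary]$Catalogue
    )
    foreach ($type in $Catalogue.Keys) {
        foreach ($keyword in $Catalogue[$type]) {
            # Escape the keyword so characters such as '.' or '(' match literally.
            if ($Text -match [regex]::Escape($keyword)) {
                [pscustomobject]@{ Type = $type; Keyword = $keyword }
            }
        }
    }
}

function Get-PdfPageText {
    # Emits one object per page with its extracted text (iTextSharp 5 API).
    # Assumes itextsharp.dll has already been loaded with Add-Type.
    param([string]$Path)
    $reader = New-Object iTextSharp.text.pdf.PdfReader -ArgumentList $Path
    try {
        for ($page = 1; $page -le $reader.NumberOfPages; $page++) {
            [pscustomobject]@{
                Page = $page
                Text = [iTextSharp.text.pdf.parser.PdfTextExtractor]::GetTextFromPage($reader, $page)
            }
        }
    }
    finally {
        $reader.Close()   # release the file handle even if extraction fails
    }
}

function Export-PdfCatalogue {
    # Scans every PDF under $Root and writes one CSV per document type to $OutDir.
    param(
        [string]$Root,
        [string]$OutDir,
        [string]$DllPath,
        [System.Collections.IDictionary]$Catalogue
    )
    Add-Type -Path $DllPath
    $hits = foreach ($pdf in Get-ChildItem -Path $Root -Filter *.pdf -Recurse -File) {
        # Pass the full path so the reader does not resolve a bare file name
        # against some other working directory.
        foreach ($p in Get-PdfPageText -Path $pdf.FullName) {
            foreach ($m in Find-TypeMatches -Text $p.Text -Catalogue $Catalogue) {
                [pscustomobject]@{
                    Type    = $m.Type
                    Keyword = $m.Keyword
                    File    = $pdf.FullName
                    Page    = $p.Page
                }
            }
        }
    }
    # Group the hits by type and write each group to its own CSV file.
    foreach ($group in $hits | Group-Object -Property Type) {
        $csv = Join-Path $OutDir "$($group.Name).csv"
        $group.Group | Export-Csv -Path $csv -NoTypeInformation
    }
}
```

A call might look like `Export-PdfCatalogue -Root 'C:\files' -OutDir 'C:\catalogue' -DllPath 'C:\path_to_dll\itextsharp.dll' -Catalogue $Catalogue`. Here `C:\files` and `C:\catalogue` are placeholder paths. The text matching is kept in its own function so it can be checked without any PDFs. That also makes it easy to reuse for other formats once their text has been extracted.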
